Last week we visited Florence and then spent a few days in Tuscany ( instead of attending Agile20xx like last year ). The Uffizi Gallery in Florence was formerly the office of the Medici family and is now one of the preeminent collections of Renaissance art in the world. Truth be told, the buildings and architecture of Florence are more impressive than the art. For some reason, the pictures of Saint Sebastian (thumbnails below with bigger images at the bottom of the post) held a particular interest for me.

After a while I realised that they are a counterpoint for Scaling Agile. The artists all agree on certain points such as “St Sebastian was male”, “He was bound and shot with arrows”, “The arrows do not seem to affect him” and “He was wearing shorts made of a sheet”. They did not agree on other points though, such as “Was he young or old?”, “Where did the arrows pierce his body?” and “Did the action take place inside or outside?”. To the artists and those in the church who commissioned the works, the differences did not matter. All that mattered was that a Christian Saint was shot with arrows for his faith and survived. I sometimes feel that some of those involved in Scaling Agile are focusing on the details that differentiate them rather than the common things that are important. In a particular context, each of the approaches to Scaling Agile may be more or less relevant.

Instead of focusing on the differences, we should focus on the core common elements and then identify the contexts where each approach is most appropriate.

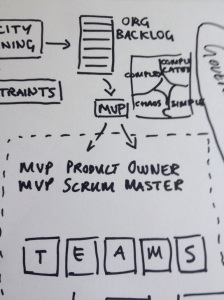

For the past year or so, @TonyGrout and I along with a bunch of other coaches have been trying to help a company to Scale Agile. This is the diagram and explanation I have been drawing for the past few months to help others understand the constraints and issues that we face.

Here is the description I’ve used with some success.

The scaled investment process starts with an Investment Decision Process which identifies an ordered list of investment possibilities ( I call it a list of Unicorn Horns as they are not realistic ). The ordering and naming of this list will be culturally specific. Do they use Weighted Shortest Job First, or Cost of Delay, or Business Cases, or just Hippos ( Highest Paid Person’s Opinion )? Who decides on the order? Do they call them MVPs or MVFs, or MMFs, or BVIs, or Investments or Stories or Bets or… The point is that the culture will determine the process to give a rough ordering to the list of potential investments. I will call them MVPs for this post.

The next step is to perform Capacity Planning for the coming period of time (typically a quarter). For this, the owner/promoter of each MVP contacts all of the groups* that need to provide input to deliver an MVP and asks them for a SWAG ( Sweet Wild Assed Guess ) in units of Scrum Team Weeks. The group puts as much effort as necessary into the estimate ( I recommend a solid five to ten minutes at most ). The group also provides their capacity for the coming period ( Default is the number of Scrum teams in the group multiplied by the number of weeks in the period, multiplied by 50% ). <Editor’s note. I realised I have not described this process in full on this blog. Watch this space>

* Group – Random name representing a group of one or more Scrum Teams that can work on a component or system within the organisation.

During Capacity Planning, the business investors ( whoever they are ) all come together and select items from the list of Unicorn Horns. For each item they select, they reduce the capacity of the impacted groups. This means the groups form the constraints rather than a generic mythical man month notion of organisational capacity. There are two outputs from this process. One, an ordered backlog for the organisation that all business investors agree to, which provides direction to the teams. Two, a list of groups that are constraining the organisation’s ability to deliver. Note that the constraints are dynamic based on the kind of work involved, although most organisations have a few groups that are needed for pretty much everything. The backlog is the focus for those interested in delivery (short term). The list of constrained groups is of interest to those managing organisational liquidity (longer term).
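For those who prefer to see the arithmetic, here is a rough sketch of the Capacity Planning step in Python. It is an illustration rather than the process we actually ran: the names (`MVP`, `default_capacity`, `plan_capacity`), the example groups and the SWAG numbers are all made up, and the rule that an item which does not fit is skipped and its short-handed groups are flagged as constraints is my simplifying assumption. In reality the investors negotiate.

```python
from __future__ import annotations

from dataclasses import dataclass


def default_capacity(scrum_teams: int, weeks_in_period: int, factor: float = 0.5) -> float:
    """Default group capacity in Scrum Team Weeks: teams x weeks x 50%."""
    return scrum_teams * weeks_in_period * factor


@dataclass
class MVP:
    name: str
    swags: dict[str, float]  # group name -> SWAG in Scrum Team Weeks


def plan_capacity(candidates: list[MVP], capacity: dict[str, float]):
    """Walk the ordered list of candidates and draw down group capacity.

    Assumption (not from the post): an item is selected only if every
    impacted group still has enough capacity; otherwise it is skipped and
    the groups that could not cover it are recorded as constraints.
    """
    remaining = dict(capacity)   # copy so the caller's dict is untouched
    backlog: list[str] = []      # output one: ordered organisational backlog
    constrained: list[str] = []  # output two: groups constraining delivery

    for mvp in candidates:
        short = [g for g, need in mvp.swags.items() if need > remaining.get(g, 0.0)]
        if short:
            for group in short:
                if group not in constrained:
                    constrained.append(group)
            continue
        for group, need in mvp.swags.items():
            remaining[group] -= need
        backlog.append(mvp.name)

    return backlog, constrained, remaining


# Hypothetical example: two groups and a 12 week quarter.
capacity = {
    "payments": default_capacity(scrum_teams=2, weeks_in_period=12),  # 12.0
    "web": default_capacity(scrum_teams=4, weeks_in_period=12),       # 24.0
}
unicorn_horns = [
    MVP("A", {"payments": 8, "web": 6}),
    MVP("B", {"payments": 6, "web": 4}),
    MVP("C", {"web": 10}),
]
backlog, constrained, remaining = plan_capacity(unicorn_horns, capacity)
print(backlog)      # ['A', 'C']
print(constrained)  # ['payments']
```

In this made-up example, B is dropped because the payments group has only 4 team weeks left after A, even though the web group has plenty of room. That is the point of planning against groups rather than a single organisational capacity number.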

The Delivery involves the teams adding the items to their team Backlogs. They build the things needed for the MVP which eventually results in a release. The release results in an outcome which feeds back into the investment decision process. This is all very standard Agile/Lean practice.

This is also where our experience differs from standard Agile Doctrine. The belief is that teams will self organise to ensure delivery of the MVPs. This is not what we have experienced. The teams deliver but the organisation does not.

To address this issue we proposed that each MVP is assigned a Product Owner and a Scrum Master who are responsible for its delivery ( I consider accountable and responsible as synonyms. Hopefully someone will explain the difference ).

The MVP Product Owner is responsible for the value delivery of the MVP (The Outcome). As the teams develop the MVP, the MVP PO will let them know what can be descoped without impacting the value delivery.

The MVP Scrum Master is responsible for the delivery of the MVP. They will ensure that the MVP is initiated properly so that everyone involved is aware of their expected contribution. They will ensure that an appropriate architecture is in place. They will set up and facilitate the MVP Scrum of Scrums and Retrospectives. They will ensure transparency on the MVP to ensure all involved can see the status.

The roles are similar to traditional Project Manager and Business Analyst roles with one huge and significant difference. They have NO authority. They influence using transparency and they rely on facilitation instead of direction. This is particularly important as they will develop the influencing skills they need when they operate in areas where they do not have authority or influence, such as in clients or other business units. They will be servant leaders. To do this, they will need tools to report and show progress.

So this is the software investment process in full. However, we also need to consider governance. A governor was a device on a steam engine that stopped it from blowing up. Two balls would spin around, their speed a function of the steam pressure. If the steam pressure went too high, centrifugal force would push the balls outwards, causing them to rise and open a valve that would release steam, resulting in the pressure dropping. The purpose of governance in an organisation is the same. It ensures that the risks within the system are managed effectively.

The role of the risk managers of the system ( another role without authority other than the power of transparency ) is to ensure that the risks in the system are properly managed and, if the individuals do not have the appropriate skills and experience to manage the risks, to ensure that they are provided with coaching so that they can. Consider people playing by a cliff. The risk manager would help to make them aware of the danger. They would show them the cliff and help them work out an appropriate risk strategy. If they wanted to build a wall but did not know how, the risk manager would introduce them to people who could teach them how. The risk manager would monitor the risk to ensure it continues to be managed. This is not about control, but more the appreciation that whilst we are playing in the field, we might forget about other things like risks and demons. Recasting the definition of done as a set of risks to be managed allows the teams to find the best solution for their context whilst ensuring that the organisation is not exposed to known risks.

These risk managers are responsible for staff liquidity at the team, group and organisational levels. They are responsible for ensuring the overall investment portfolio is balanced as well as ensuring investment is occurring where it should rather than where it is easy. Rather than simply looking at status of a team, they are considering the health of the organisation as a whole as well as in parts.

One of the most critical risks to address is ensuring that the correct approach is taken to building the teams’ backlogs for the MVP.

The Cynefin Framework is ideal ( and necessary ) for helping teams understand whether they should build the backlog iteratively ( in the complex domain ), or build independent solutions ( in the chaotic domain ), or up-front ( in the complicated domain ), or simply let the team do its thing ( in the obvious domain ).

A risk management and coaching based approach to delivery and governance is vital for Scaling Agile. It allows software development to fully integrate with the entire business. However, Cynefin is the “Fifth Discipline” of Scaling Agile to the organisational level. Without it, we will be doing the “Wrong things righter, er, The right things wronger”. Without it, we will be barking up the wrong tree.

So have I painted a process where we agree he was shot with arrows? Or does this invite discussion about how old or how good looking he is?

Thoughts?

Paintings of Saint Sebastian