WARNING: I’ve been lazy. It is quicker and easier (and more fun) to write this than to hunt through the inter-web to find out whether someone else has already written it down (I’m kinda assuming Troy or Liz have). I just need something to refer to.

I have been helping a waterfall Scrum* project adopt some Agile practices. Unfortunately the project had reached the point where there was a large backlog of manual User Acceptance Testing to be done. During the week I had a great conversation with the test manager for the project (The person producing the management reports). I realised that it was the perfect case study to understand the impact of the culture (risk aversion versus risk management) on a project.

The test manager categorises all bugs as either Priority 1 or Priority 2. All of the Priority 1 and 2 bugs need to be fixed and signed off for the UAT to come to an end. Note that it is not desirable to time box the UAT on a Waterfall project as this will drive the wrong behaviours. The inspiration for this post came from a conversation on whether Priority 1 or Priority 2 bugs should be fixed first.

Managing the UAT

In order to manage the UAT, the test manager needs two graphs.

- A burn-down of the tests to be run.

- A Cumulative Flow Diagram showing bugs as they are opened and closed.
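As a rough sketch of the data behind those two graphs, both series can be built from daily counts. The function names and the sample numbers here are made up for illustration; they are not taken from the project.

```python
from itertools import accumulate


def burn_down(total_tests, completed_per_day):
    """Tests still to be run at the end of each day."""
    return [total_tests - done for done in accumulate(completed_per_day)]


def cumulative_flow(opened_per_day, closed_per_day):
    """Cumulative bugs opened and closed at the end of each day."""
    return list(accumulate(opened_per_day)), list(accumulate(closed_per_day))


# Made-up daily counts for a four day UAT.
remaining = burn_down(10, [2, 3, 1, 4])
opened, closed = cumulative_flow([3, 2, 1, 0], [0, 2, 2, 2])
```

Plotting `remaining` gives the burn-down, and plotting `opened` and `closed` on the same axes gives the two lines of the Cumulative Flow Diagram.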

The graphs below illustrate this point.

The UAT is over when the bugs closed line (green) meets the bugs opened line (black), assuming there are zero outstanding test cases that have not reached the end of the test cycle.
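That end condition can be written down as a small check. This is only a sketch: the names `uat_finished` and `first_finished_day` and the sample series are assumptions for illustration.

```python
def uat_finished(bugs_opened, bugs_closed, tests_outstanding):
    """True when every raised bug is closed and no test case is still in progress."""
    return tests_outstanding == 0 and bugs_closed >= bugs_opened


def first_finished_day(opened, closed, outstanding):
    """Index of the first day on which the UAT is over, or None if it never is."""
    for day, (o, c, t) in enumerate(zip(opened, closed, outstanding)):
        if uat_finished(o, c, t):
            return day
    return None


# Made-up cumulative series. On day 1 the lines touch, but test cases are
# still outstanding, so the UAT is not over until day 3.
opened = [1, 1, 3, 3]
closed = [0, 1, 2, 3]
outstanding = [5, 3, 0, 0]
finish_day = first_finished_day(opened, closed, outstanding)
```

The day 1 case is the reason for the second condition: the lines meeting means nothing while there are still test cases that could raise new bugs.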

There are two key milestones for a UAT.

- The most important milestone is reaching the end of the UAT (Obviously).

- The second most important milestone is when all test cases have reached the end of their cycle, even if they contain bugs and are not signed off. The burn-down for this is the blue dotted line. This matters because it is the point at which further bugs are unlikely to be raised. Bugs discovered after this point are likely to have been introduced as a result of fixing another bug.

The impact of Culture

So what does this have to do with culture?

In a risk averse culture, we avoid or ignore risk. We would want to demonstrate our competence as soon as possible. This means we would want to demonstrate progress as soon as possible to gain the confidence of our managers.

In a risk managed culture, we would want to address the riskiest elements of the work first. We would be more interested in doing what is right for the organisation than demonstrating our own competence quickly.

Opening Bugs

So how would this impact the testing cycles?

If we are a risk averse tester we would want to demonstrate progress. We would want to show that we were getting through the test cases as quickly as possible.

Our focus would be on the pink line as this shows progress to the end of the UAT, the most important milestone.

To do this, we would pick those test cases that are easiest to sign off. We would pick those test cases that are least likely to contain bugs because they are quick and easy.

Furthermore, we would want to close bugs and sign-off test cases in preference to finding new bugs.

A risk managing tester would want to find bugs as soon as possible. Our focus would be on the blue dotted line. We would want to get to the end of all the test cases so that we would know most of the bugs in the system. For us, the most important thing would be to see the number of bugs raised flatten off as this allows us to manage the fixing of the bugs.

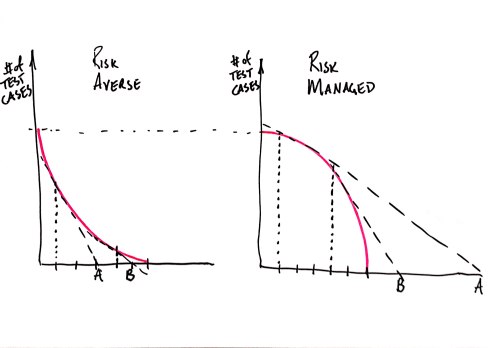

The graphs below demonstrate the impact of culture on the shapes of the lines. They assume the same project, with an identical number of bugs discovered.

Both projects finish on the same date because they contain the same number of bugs, which require the same amount of effort to fix. However, consider the perspective of the test manager. In the risk managed culture, they know that they have discovered most of the bugs much earlier, and can make appropriate resourcing decisions.

Closing Bugs

So how would this impact the fixing of bugs?

If we are a risk averse developer we would want to demonstrate progress. We would want to show that we were getting through the bugs as quickly as possible.

Our focus would be on the green and pink lines as these show progress to the end of the UAT, the most important milestone.

To do this, we would pick those bugs that are easiest and quickest to fix and sign off. We would defer bugs that are complex, or take a long while to fix. We would potentially defer those bugs that block us reaching the end of the test cycles if they are complex or take a long time to fix.

Furthermore, we would want to close bugs and sign-off test cases in preference to finding new bugs as this looks better on management reports.

A risk managing developer would want to find bugs as soon as possible. Our focus would be on the blue dotted line (fixing bugs that block us from reaching the end of test cycle), and on fixing bugs that are most likely to result in further bugs, namely the complex and large bugs. The number of bugs closed and the number of test cases signed off would be of secondary importance.
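The two ways of choosing the next bug to fix can be sketched as two sort orders. The `Bug` fields and the sample bugs are hypothetical, and effort is used here as a stand-in for "complex and large".

```python
from dataclasses import dataclass


@dataclass
class Bug:
    name: str
    effort_days: int      # hypothetical estimate of size and complexity
    blocks_testing: bool  # stops a test case reaching the end of its cycle


bugs = [
    Bug("A", 1, False),
    Bug("B", 5, True),
    Bug("C", 3, False),
    Bug("D", 2, True),
]

# Risk averse: quickest and easiest first, whatever they block.
risk_averse = sorted(bugs, key=lambda b: b.effort_days)

# Risk managed: bugs blocking the test cycle first, then the largest bugs.
risk_managed = sorted(bugs, key=lambda b: (not b.blocks_testing, -b.effort_days))

averse_order = [b.name for b in risk_averse]
managed_order = [b.name for b in risk_managed]
```

With these numbers the risk averse order is A, D, C, B, so the large blocking bug B is fixed last. The risk managed order is B, D, C, A.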

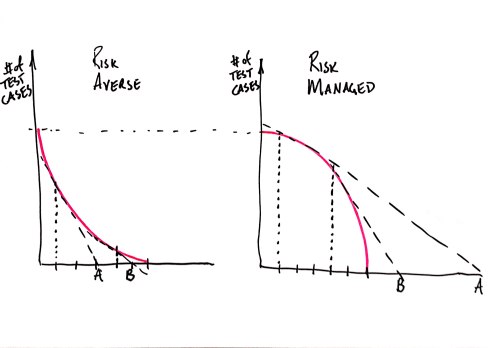

Assuming a risk managed tester, the graph below shows how the culture the developer works in affects how bugs are fixed.

The risk averse developer would want to work on easier bugs that show them making progress. As a result there might be a delay (marked as “A” on the graph) on finding all the bugs in the system. This means that the risk managed developer would know with more certainty how much work needs to be done for “A” units of time.

The risk managed developer trades uncertainty at the start of the testing cycle for more certainty at the end of the test cycle. Conversely, the risk averse developer pushes more uncertainty (risk) to the end of the test cycle.

The risk averse behaviour makes it very difficult to manage resource loading for the test cycle whereas risk managed behaviour allows effective management of resource loading.

Simply put, risk managed behaviour gives more options than risk averse behaviour.

A final point on this: consider the projection (using a velocity measure) of the end of UAT. The graph below shows that the risk averse behaviour results in larger and larger time extensions at the end of the testing cycle (A -> B), whereas the risk managed behaviour results in smaller and smaller reductions of the time to the end (A -> B).
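Here is one way such a projection might be calculated. The post does not say which velocity measure is used, so this sketch assumes the average number of bugs closed per day over a recent window; the numbers are invented.

```python
def projected_days_remaining(open_bugs, recent_closed_per_day):
    """Days to the end of UAT at the recent closing rate, or None if nothing is closing."""
    total_closed = sum(recent_closed_per_day)
    if total_closed == 0:
        return None
    # Same as open_bugs / (average bugs closed per day over the window).
    return open_bugs * len(recent_closed_per_day) / total_closed


# Risk averse pattern: the easy bugs close fast early on...
early = projected_days_remaining(12, [4, 4, 4])
# ...then the hard bugs that were deferred slow the rate down.
late = projected_days_remaining(8, [1, 1, 2])
```

In this example the early projection is 3 days to go. Three days later, with the easy bugs gone, the projection has grown to 6 days even though fewer bugs are open.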

In conclusion, risk managed behaviour does allow management of testing, whereas risk averse behaviour does not. Anyone managing a testing cycle should understand that attempting to demonstrate early progress leads to a loss of management and control.

*These days it is really easy to find Waterfall projects. They are normally called a “Flagship Agile” project. The irony escapes them. A Culture Changing Agile Project would be called an “Agile Canoe” or “Agile Raft” or “Agile Rowing Boat”. Certainly not the flagship which is normally an aircraft carrier.